TL;DR

JTBD stands for Jobs To Be Done. This method helps us build products around the progress people want to make.

We focus on customer jobs, pains, and outcomes instead of features. This keeps strategy and discovery work aligned.

Here’s how it goes: we interview customers, map their jobs, write job stories, size up opportunities, prioritize, ship, and then measure results.

JTBD helps us write better requirements, build stronger roadmaps, and sharpen messaging. Our value props become jobs-based, not just feature lists.

If you’re starting out, try 5-8 interviews, one job cluster, and one outcome to improve.

What “JTBD” stands for (and what it really means)

JTBD is short for Jobs To Be Done. The framework helps us see what job a user “hires” our product to do.

Jobs-to-be-done theory centers on the progress users want to make. It examines their situation and the outcomes they care about.

A job is the progress someone tries to make in a specific situation, working within constraints toward a result they care about.

JTBD flips how we think about products. Instead of “Which features should we build?” we ask, “What progress do users want?”

JTBD fits right into product strategy and does most of its work during discovery, before we move to delivery and go-to-market.

Why JTBD matters

JTBD keeps us focused on real customer needs instead of features we think are cool. We can build better roadmaps by tying our work to outcomes that actually matter.

It also makes our product messaging sharper at launch, because we understand what customers are really trying to achieve.

The JTBD methodology (light but effective)

1) Collect stories (interviews)

We talk to recent adopters, switchers, and even non-adopters. These chats reveal real customer jobs.

We dig for specific moments by asking, “When did you realize you needed something?” or “What else did you try?” These questions surface the actual triggers.

We capture the story flow: context → struggle → workaround → outcome → trade-offs. We focus on what people do, not just what they say.

2) Map the job

Most customer jobs follow a basic flow:

Define goal → Find options → Prepare/setup → Execute → Monitor & adjust → Conclude & follow-up

This map shows us where customers struggle most.
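One lightweight way to find those hotspots is to tag each interview note with the job-map stage it belongs to and count the tags. The sketch below assumes the notes were already tagged by hand during synthesis; `Stage` and `struggle_hotspots` are hypothetical names, not part of any tool we use.

```python
from collections import Counter
from enum import Enum


class Stage(Enum):
    """The six job-map stages, in the order customers move through them."""
    DEFINE_GOAL = "Define goal"
    FIND_OPTIONS = "Find options"
    PREPARE = "Prepare/setup"
    EXECUTE = "Execute"
    MONITOR = "Monitor & adjust"
    CONCLUDE = "Conclude & follow-up"


def struggle_hotspots(notes: list[tuple[Stage, str]]) -> list[tuple[Stage, int]]:
    """Count hand-tagged struggle notes per stage, most-struggled stage first."""
    counts = Counter(stage for stage, _ in notes)
    # Every stage appears, even with zero notes; sorted() is stable,
    # so ties keep the job-map order.
    return sorted(((s, counts.get(s, 0)) for s in Stage), key=lambda pair: -pair[1])


notes = [
    (Stage.EXECUTE, "pickup pin landed on the wrong street"),
    (Stage.EXECUTE, "driver had to call twice"),
    (Stage.FIND_OPTIONS, "compared prices across two apps"),
]
print(struggle_hotspots(notes)[0])
```

Stages with no notes stay in the output with a count of zero, which can also flag parts of the job the interviews never reached.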

3) Write job stories

We use a simple format: When [situation], I want to [progress], so I can [outcome].

We add constraints like time, place, device, and stakeholders, plus success signals that show what “good” looks like.
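A job story with its constraints and success signals can also live as a small structured record, which makes it easy to reuse in PRDs and experiment briefs. This is a minimal sketch; the `JobStory` class and its field names are our own illustration, not a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class JobStory:
    situation: str
    progress: str
    outcome: str
    constraints: list[str] = field(default_factory=list)      # time, place, device, stakeholders
    success_signals: list[str] = field(default_factory=list)  # what "good" looks like

    def render(self) -> str:
        """Render in the 'When ..., I want to ..., so I can ...' format."""
        lines = [f"When {self.situation}, I want to {self.progress}, so I can {self.outcome}."]
        if self.constraints:
            lines.append("Constraints: " + ", ".join(self.constraints))
        if self.success_signals:
            lines.append("Success signals: " + ", ".join(self.success_signals))
        return "\n".join(lines)


story = JobStory(
    situation="I'm leaving a venue late at night",
    progress="get a safe ride home quickly",
    outcome="arrive without worrying",
    constraints=["phone only", "unfamiliar area"],
    success_signals=["pickup within 5 minutes"],
)
print(story.render())
```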

4) Cluster jobs & outcomes

We group similar stories. We separate outcomes into three types:

- Functional: get X done

- Emotional: feel safe or confident

- Social: look competent

5) Size opportunities

We look for outcomes that are important but underserved. Quick surveys and analytics help us spot gaps, such as drop-offs or repeated workarounds.
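For the survey side, one widely used option is Tony Ulwick's opportunity score from Outcome-Driven Innovation: Opportunity = Importance + max(Importance − Satisfaction, 0), with both inputs on a 0–10 scale. The sketch assumes 1–5 survey ratings converted to 0–10 via the share of 4s and 5s ("top-two-box"); the function names and the cutoff of 10 are assumptions for illustration, so tune them to your own data.

```python
def top_two_box(ratings: list[int]) -> float:
    """Share of 1-5 ratings that are a 4 or 5, scaled to 0-10."""
    if not ratings:
        raise ValueError("need at least one rating")
    return 10 * sum(r >= 4 for r in ratings) / len(ratings)


def opportunity_score(importance: float, satisfaction: float) -> float:
    """Importance plus the unmet gap; satisfaction above importance adds nothing."""
    return importance + max(importance - satisfaction, 0.0)


def underserved(
    outcomes: dict[str, tuple[list[int], list[int]]], threshold: float = 10.0
) -> list[tuple[str, float]]:
    """Outcomes scoring at or above the (assumed) threshold, highest first."""
    scored = []
    for name, (importance_ratings, satisfaction_ratings) in outcomes.items():
        score = opportunity_score(
            top_two_box(importance_ratings), top_two_box(satisfaction_ratings)
        )
        if score >= threshold:
            scored.append((name, round(score, 1)))
    return sorted(scored, key=lambda pair: -pair[1])


survey = {
    # outcome: (importance ratings, satisfaction ratings), each on a 1-5 scale
    "minimize time to find the pickup spot": ([5, 5, 4, 4, 3], [2, 3, 2, 4, 1]),
    "minimize cost of the ride": ([4, 3, 3, 5, 2], [4, 4, 5, 4, 3]),
}
print(underserved(survey))
```

The pickup outcome scores 8 + (8 − 2) = 14 and makes the list; the cost outcome is already well served (4 + 0 = 4) and drops out.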

6) Prioritize & test

We turn the top job-and-outcome pairs into experiments, each tied to a real metric.

7) Ship → measure → iterate

We instrument funnels and outcome metrics, and lightweight decision records keep what we learn visible.

Quick examples (B2C & B2B)

Take a B2C ride-hailing case study. Late-night riders want safe, hassle-free trips home from unfamiliar places.

This leads to requirements like:

- Precise pickup location pins

- “Quiet ride” options

- Trip sharing for safety

- Saved destination features

We measure success using pickup speed, customer satisfaction, and repeat usage.

Now, a B2B analytics platform case study. When auditors need 12-month data trails, users must export verified events quickly with the right access context.

Key features:

- Tamper-proof activity logs

- Export templates ready to go

- Role-based data filters

- Guaranteed export timeframes

We track report generation time and support tickets during audits. These examples show how user goals shape features and success metrics.

How to implement JTBD in product management (day-to-day)

We add a JTBD block to our Interview Guide and Research Plan. We swap in question templates from our library.

In the PRD Problem section, we write the primary job story and target outcome metric. We keep acceptance criteria tied to that outcome, not just UI details. Delivery pages follow this: problem → stories → acceptance criteria → analytics → rollout.

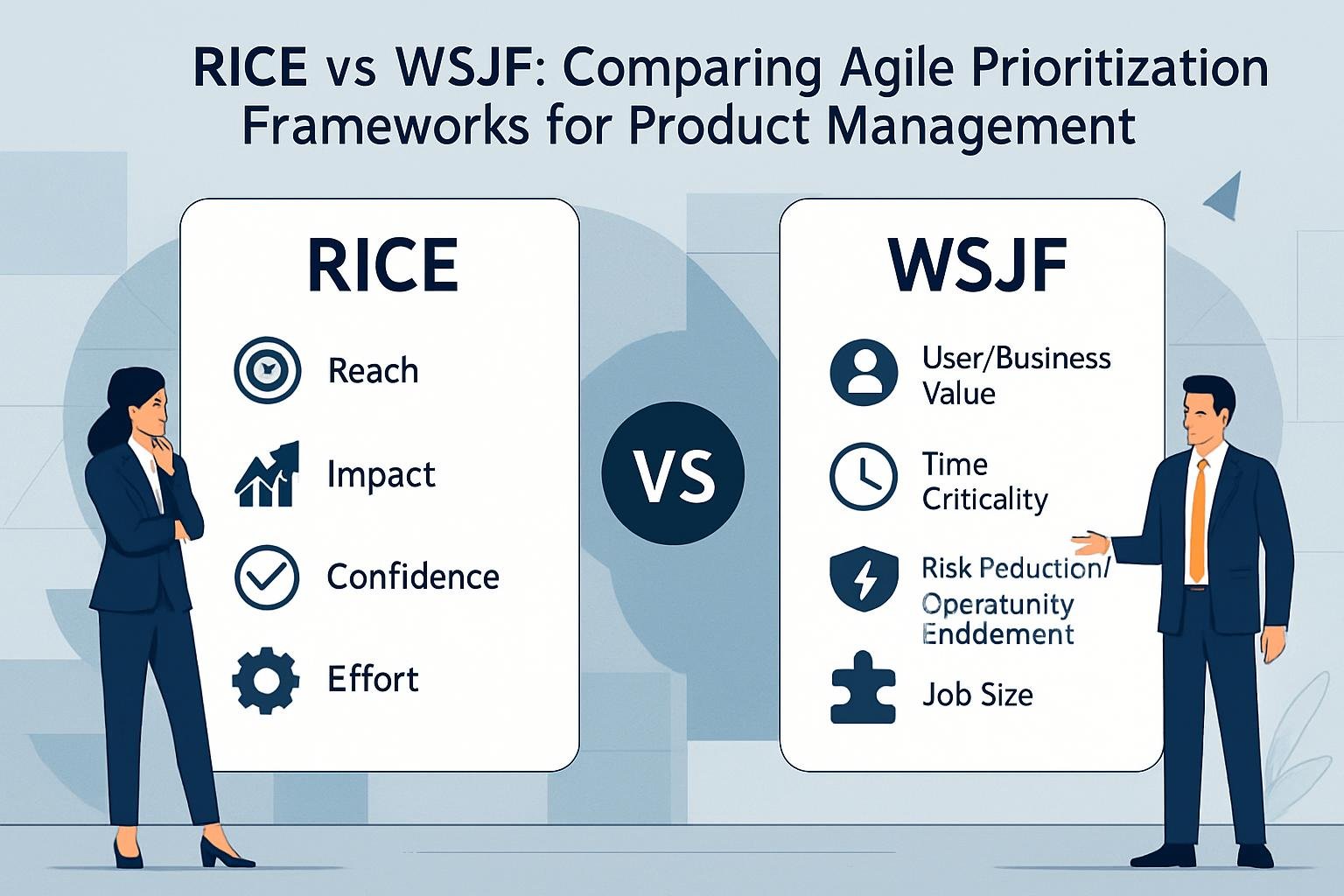

We score roadmap items by their impact on the job’s outcome, then combine that score with effort and risk in RICE or WSJF. This keeps our roadmap linked to outcomes.
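As a rough sketch of how an outcome-impact score can feed those frameworks: RICE is commonly computed as Reach × Impact × Confidence ÷ Effort, and SAFe's WSJF as Cost of Delay ÷ Job Size, where Cost of Delay sums user-business value, time criticality, and risk reduction/opportunity enablement. Here we assume the job-outcome impact stands in for both the RICE Impact term and the WSJF value term, with effort used as job size; `RoadmapItem` and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RoadmapItem:
    name: str
    reach: int               # users per quarter whose job this touches
    outcome_impact: float    # expected movement on the job's outcome (relative scale)
    confidence: float        # 0-1, how sure we are about reach and impact
    effort: float            # person-months; also used as WSJF job size
    time_criticality: float = 0.0  # same relative scale as outcome_impact (assumption)
    risk_reduction: float = 0.0    # same relative scale as outcome_impact (assumption)

    def rice(self) -> float:
        """Reach x Impact x Confidence / Effort, with outcome impact as Impact."""
        return self.reach * self.outcome_impact * self.confidence / self.effort

    def wsjf(self) -> float:
        """Cost of delay / job size, with outcome impact as the value term."""
        cost_of_delay = self.outcome_impact + self.time_criticality + self.risk_reduction
        return cost_of_delay / self.effort


pins = RoadmapItem("precise pickup pins", reach=20000, outcome_impact=2.0,
                   confidence=0.8, effort=4, time_criticality=1.0)
quiet = RoadmapItem("quiet ride option", reach=5000, outcome_impact=1.0,
                    confidence=0.5, effort=2)
ranked = sorted([quiet, pins], key=RoadmapItem.rice, reverse=True)
print([item.name for item in ranked])
```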

For launch messaging, we use jobs-based value props in our messaging house and sales materials, including battlecards and ROI calculators.

How to implement JTBD in project management (delivery ops)

We call a project complete when the job story is satisfied and the outcome metrics move the right way. We also update enablement docs.
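The completion rule above can be written as a simple check. We assume each outcome metric has a baseline, a current value, a direction, and a minimum change that counts as real movement, and we read "move the right way" as every metric improving; `OutcomeMetric` and `project_complete` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class OutcomeMetric:
    name: str
    baseline: float
    current: float
    higher_is_better: bool
    min_change: float = 0.0  # smallest movement we treat as real (assumption)

    def moved_right_way(self) -> bool:
        """True if the metric improved by more than min_change in its good direction."""
        delta = self.current - self.baseline
        if not self.higher_is_better:
            delta = -delta  # e.g. report time: a drop is an improvement
        return delta > self.min_change


def project_complete(job_story_satisfied: bool, metrics: list[OutcomeMetric]) -> bool:
    """Complete only if the job story is satisfied and every outcome metric improved."""
    return job_story_satisfied and bool(metrics) and all(m.moved_right_way() for m in metrics)


report_time = OutcomeMetric("report generation time (min)", baseline=45, current=30,
                            higher_is_better=False, min_change=5)
audit_tickets = OutcomeMetric("audit support tickets", baseline=120, current=125,
                              higher_is_better=False)
print(project_complete(True, [report_time, audit_tickets]))
```

Requiring at least one metric means a project with no outcome instrumentation can never be marked complete by accident.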

Change management steps:

- Train support teams on job flows

- Train sales teams on before/after processes

- Update operations docs

- Prep go-to-market materials

Starter toolkit (copy-ready)

JTBD interview guide (one-pager)

This guide helps you structure customer interviews to get to job requirements.

Warm-up: Tell me about the last time you…

Trigger: What happened that made this a priority?

Searching: What did you try first? What else?

Hiring: Why choose X? What nearly stopped you?

Using: What’s awkward today? What does “good” look like?

Outcome: If this went perfectly, what would be true?

Job story template

Use this template to frame customer needs:

Primary: When [situation], I want to [progress], so I can [outcome].

Constraints: [time, location, device, security, approvals]

Signals & metrics: [leading/lagging, guardrails]

Alternatives considered: [workarounds, competitors, “do nothing”]

Opportunity canvas (mini)

This canvas maps opportunities from research to action.

Job cluster → underserved outcomes → metrics → assumptions → smallest test → owner → decision date.
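If the canvas lives in a script or tool rather than a doc, a small record with a completeness check helps keep half-filled rows from going to test. This is a sketch with hypothetical field names that mirror the canvas steps; it is not tied to our Experiment Brief or Decision Log format.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class OpportunityCanvas:
    # Fields follow the canvas order: cluster -> outcomes -> ... -> decision date.
    job_cluster: str = ""
    underserved_outcomes: str = ""
    metrics: str = ""
    assumptions: str = ""
    smallest_test: str = ""
    owner: str = ""
    decision_date: Optional[date] = None

    def missing_fields(self) -> list[str]:
        """Names of canvas fields that are still empty, in canvas order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


draft = OpportunityCanvas(job_cluster="late-night safe rides", owner="rider PM")
print(draft.missing_fields())
```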

Use your Experiment Brief or Decision Log to track progress step by step.

Common pitfalls (and how to dodge them)

We often confuse feature lists with actual jobs. Keep asking “so you can…?” until you hit the real outcome.

Don’t assume one persona equals one job. The same person has different jobs in different contexts.

Don’t measure UI clicks instead of outcome movement. Tie analytics to real progress, such as time saved or success rates.
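To tie analytics to progress rather than clicks, we can summarize job attempts instead of UI events. The sketch assumes a hypothetical event export in which each attempt has a start time and an optional completion time in seconds; `JobAttempt` and `outcome_metrics` are illustrative names, and abandoned attempts count against the success rate.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class JobAttempt:
    started_at: float              # seconds; when the user began the job
    completed_at: Optional[float]  # seconds; None if the attempt was abandoned


def outcome_metrics(attempts: list[JobAttempt]) -> dict[str, float]:
    """Success rate and median time-to-complete across job attempts."""
    if not attempts:
        raise ValueError("need at least one attempt")
    durations = [a.completed_at - a.started_at for a in attempts if a.completed_at is not None]
    return {
        "success_rate": len(durations) / len(attempts),
        # NaN when nothing completed, so zero is never mistaken for "instant".
        "median_time_to_complete_s": median(durations) if durations else float("nan"),
    }


attempts = [
    JobAttempt(0, 120),
    JobAttempt(10, 70),
    JobAttempt(20, None),  # abandoned
    JobAttempt(30, 330),
]
print(outcome_metrics(attempts))
```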

Books to learn more (shortlist)

Start with Competing Against Luck by Clayton Christensen for the core theory. Jobs to Be Done: Theory to Practice by Tony Ulwick gives a more structured approach.

The Jobs To Be Done Playbook by Jim Kalbach has practical exercises. When Coffee and Kale Compete by Alan Klement digs into customer motivation.

Demand-Side Sales 101 by Bob Moesta ties jobs theory to sales strategy in a way that’s actually useful.

FAQ (quick hits)

What is JTBD in one sentence? It’s a way to design around the progress users want in their real context, not just around product features.

How is JTBD different from personas? Personas describe who your users are. JTBD digs into why they act and what progress they’re after.

Honestly, you should use both. Our strategy pages already pair JTBD with personas and archetypes.

Where does JTBD live in our process? You’ll find it across Strategy, Discovery, Delivery, and Go-to-Market phases.

JTBD shapes research, PRDs, roadmaps, and even messaging.

How do we know a job is real? It’s real when people describe a recent trigger, when you can spot a workaround, or when you can measure a clear outcome.

What metric should we attach to the job? Pick a metric the user actually feels. Think time-to-complete, error rate, or confidence level.

Our growth and analytics pillars list some common metric frameworks if you want to dig deeper.

Can JTBD work for legacy products? Absolutely. Sometimes, the job is all about compatibility and reliability.

Just focus on outcomes that keep customers running and help them pass audits.